Night Sky Stacker

An exploratory project where I attempt to implement my own astrophotography image stacker that corrects for the earth's rotation, allowing us to boost the image signal by combining multiple frames.

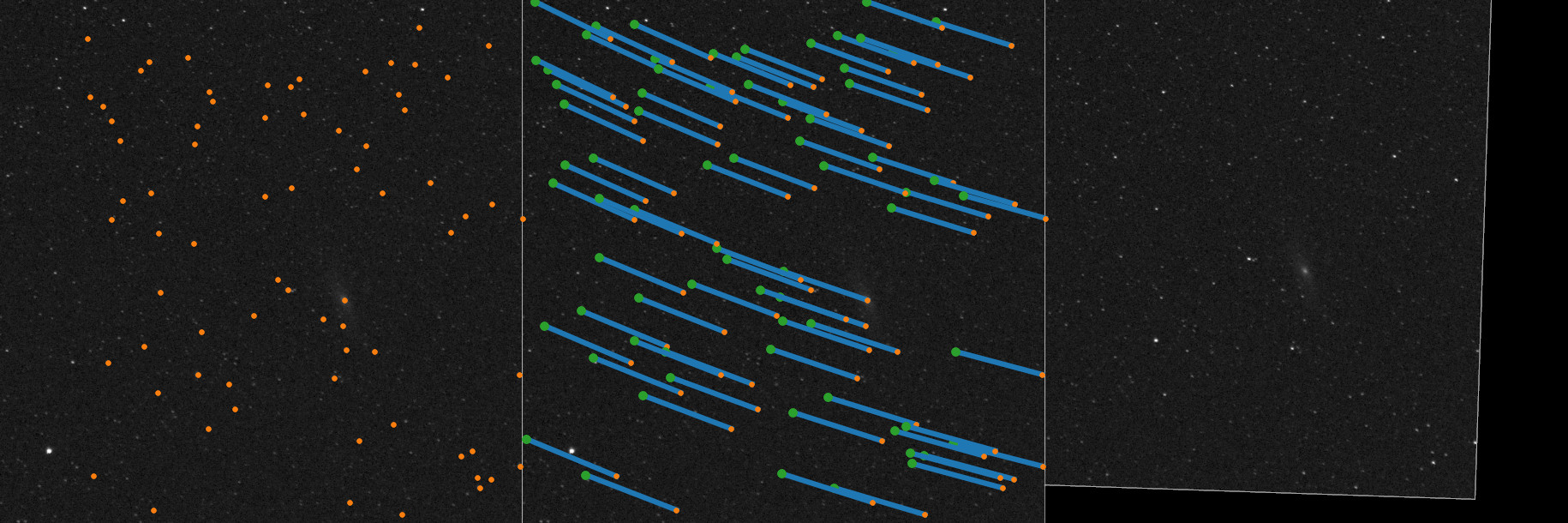

Parts of the implementation is shown below. For each of the 70 frames in 2021-12-25 Andromeda (left), stars are detected, registered, and coordinates transformed (mid) to account for the Earth's rotation (right).

You can click the animation loop to pause.

Background

This was supposed to be a small test to see if I could detect stars using a blob-detection algorithm I stumbled across. Turns out, to my delight, that it can! It did, however, end up snowballing into a project.

I primarily use DeepSkyStacker when stacking astrophotographies. It is a huge pain to keep a working Windows VM ready for the sole purpose of running the software, alas, I find the results better than the competition. DeepySkyStacker's technical documentation vaguely hints at their implementation, but fortunately links to the papers that inspired them. These papers have also been the source of my inspiration for this project, but I did end up deviating quite significantly in some key areas to better fit my goal.

- F. Valdes et al. FOCAS Automatic Catalog Matching Algorithms

- M. Marszalek et al. Pattern Matching with Differential Voting and Median Transformation Derivation

While I will continue using it DeepSkyStacker, it seems that my implementation here is capable of at least simpler stacks should my relationship with windows further degrade, which is nice.

Implementation

The source code has not yet been provided as it is unfit for any 3rd party human to see. This was supposed to be a small star detection test only, but as the project grew, so did the Jupyter Notebook it lives in.

1 Star Detection

The Laplacian of Gaussian algorithm is what got me started. I love how elegant it is. It achieves blob (star) detection by representing the data at multiple scales which, when normalized, allows for the detection of blobs of various sizes, manifesting as local minima in the constructed scale-space volume.

1.1 Scale-Space Representation

I won't repeat what Wikipedia already explains well, but in essence, the scale-space representation is constructed by convolving the data with Gaussian kernels at various standard deviations , then applying the Laplacian operator to each slice in the resulting volume.

An example of the resulting scale-space volume is shown in the figure below. What's interesting, and quite useful, is that there exists a relationship between and the blob radius given by

which conveniently lets us target a range of blob radii. The aforementioned Laplacian operator produces a strong negative result for blobs that fall into one of the chosen scales, here inverted as white spots.

I recommend reading the works of T. Lindeberg for a proper exploration of scale-space theory.

- T. Lindeberg. Scale-Space Theory in Computer Vision

- T. Lindeberg. Discrete Scale-Space Theory and the Scale-Space Primal Sketch

1.2 Finding Local Minima

Once we got our scale-space volume, which includes built-in noise suppression from the Gaussian kernels, we define a star to be any point that has a lower value than its 26 neighbors, aka a 3D local minima.

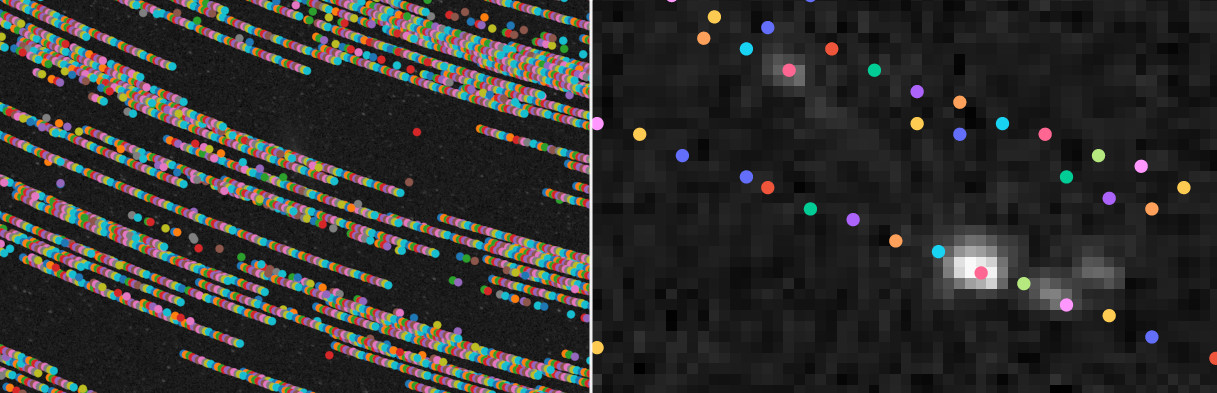

The figure below shows the brightest 50 stars detected in each of the 70 frames individually, full image (left) and zoomed in (right). The streaks indicate reliable detection in both space and time.

2 Point-Cloud Registration

Assuming there is significant overlap in detected stars between frames, we now want to find the transform that best fits the coordinates in a frame to another , or a Point-Cloud Registration. These can get complex, but our case is simple as a rigid transformation with translation and rotation is sufficient.

2.1 Iterative Closest Point

After much faffing about with all kinds of fancy algorithms I found that Iterative Closest Point (ICP), which is comparatively simple, did what I wanted reliably. It finds, for each step, the closest distances between points in and , then solves the least squares problem to find the best transformation that fits them.

One problem is, however, that the ICP assumes that all the points in will have a match in , which is not at all the case for us here as there is no guarantee that all stars detected between the two frames are the same. This will result in the ICP never properly converging and must be dealt with.

If we assume that most, but not all, stars are the same between and , we filter outlier distances in each iteration before fitting using Median Absolute Deviation (MAD), a robust statistic. Given by

where is the distance array, we can remove all outliers in each step, letting the ICP converge on a set of stars that exists in both frames and , leading to a far better fit than if all stars were included.

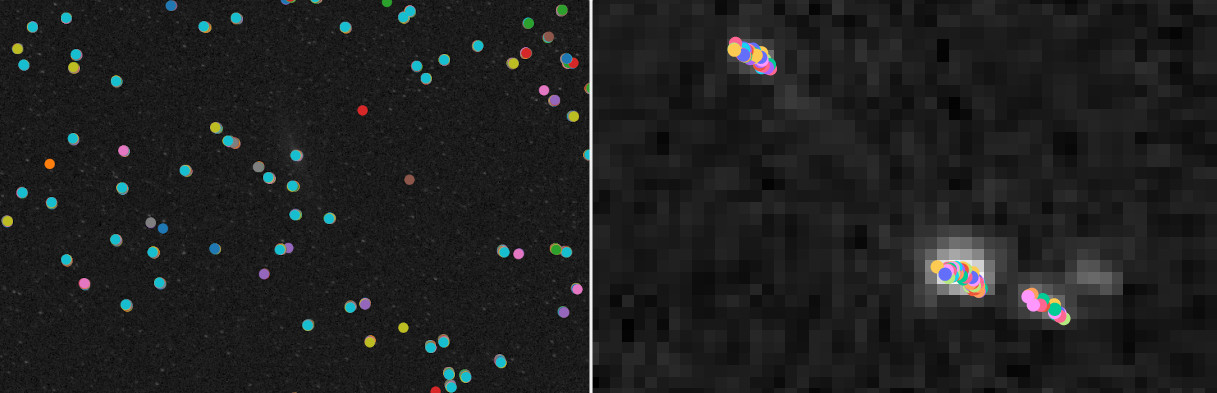

The figure below shows the same image as above (1.2), but where the same points have been fitted to the coordinates of the stars in the middle-most frame (30), superimposed onto the raw image.

It was observed that the fit is gradually worse closer to the edges, introducing a drift of a few pixels. This is likely the lens warping slightly at the edges, a common artifact.

3 Coordinate Transform

We now got the individual transformation matrices per frame, and simple matrix multiplication can be utilized to find the new coordinates [x', y'] for each original pixel [x, y] as a linear transformation. Pixels that fall outside the original frame matrix shape are discarded, and vacant cells are left empty.

Below is a visualization of the transformation. Stars are detected (orange dots) in the raw image, then fitted the reference stars (green dots) using ICP. The output transform is represented by the blue lines. Applying the same transform to each pixel in the raw image gives us the result shown to the right.

Stacking

Once all 70 frames have been aligned they can be combined to draw out faint signals and suppress noise. I've implemented Kappa-Sigma Clipping, a simple but reliable stacking algorithm that for each iteration rejects information per pixel that falls outside the range

where is the average, the standard deviation, and a multiplier.

Result

This project was a treat to work on, and I'm quite happy with the results. Without patting myself too hard on the back, my DIY stacking implementation turned out much better than I anticipated. I can actually use this.

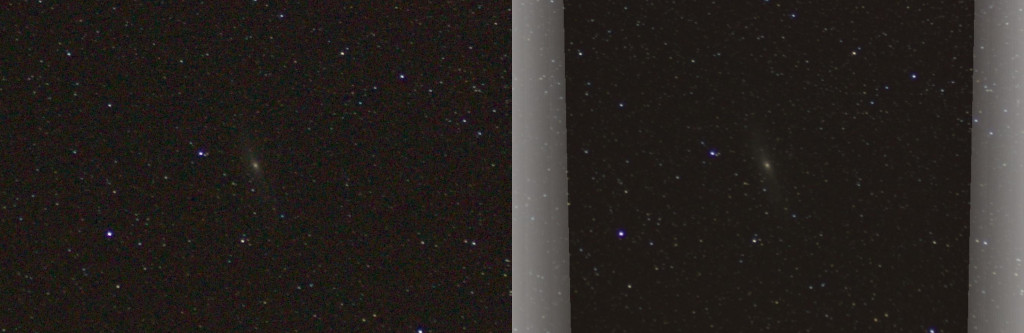

The figure below compares a crop of frame 30 (left) to all 70 frames stacked (right) by the implementation described in this project. All three (RGB) channels have been aligned. The white areas seen on the right are expected edge effects from the transformation.

Below is the same comparison, but zoomed in to highlight the difference in SNR. Faint stars that are effectively invisible in a single frame can clearly be seen in the stacked result.

To Do

- Step 1.2 is hilariously slow, and should be implemented as a C-module.

- Implement and package as a contained CLI.